Linear Systems of Equations

3/12 in Linear Algebra. See all.

Linear Systems of Equations (SLEs)

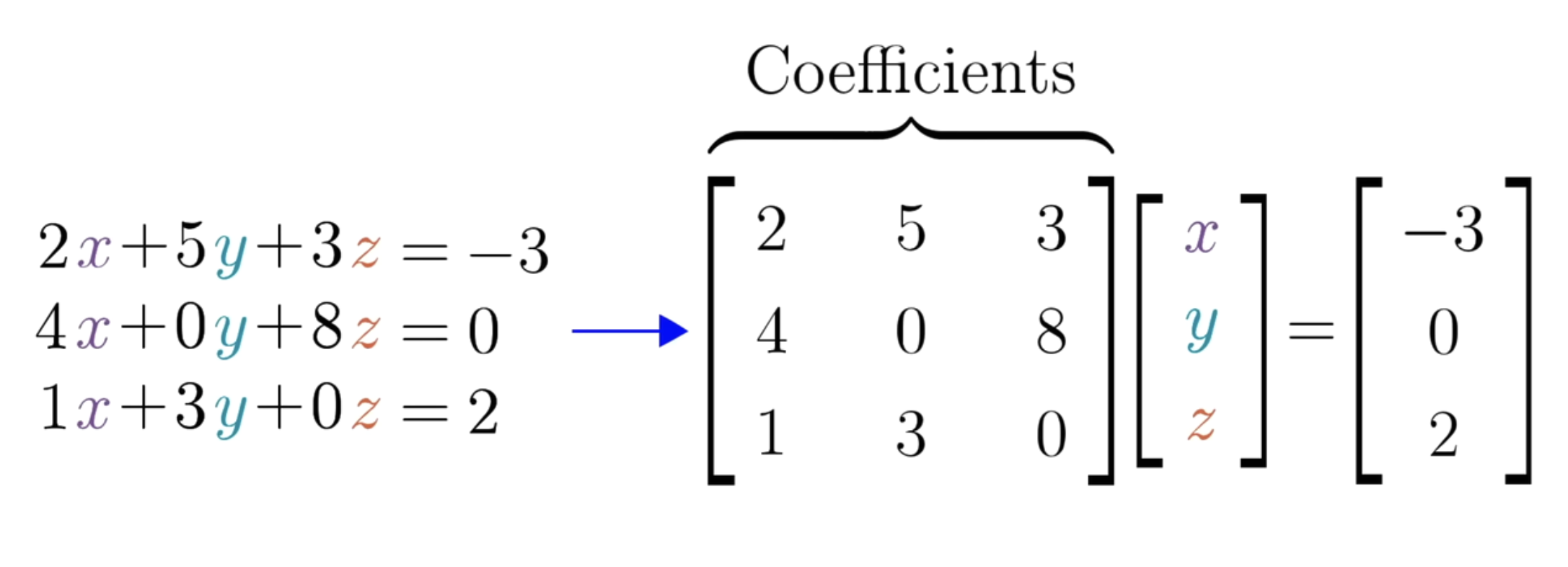

A system of equations (you know this), where every term is a

scalar multiple of a variable. Then, each equation models a

linear combination of the variables.

Credit to 3Blue1Brown.

Credit to 3Blue1Brown.

This can be written as

First, let's note that a system of linear equations will have either zero, one, or infinitely many solutions:

Theorem: Suppose

Here are some important terms we need before we can solve SLEs:

Consistent

A linear system of equations is consistent if

it has at least one solution. Otherwise, it is inconsistent.

Homogenous

A system of linear equations corresponding the matrix equation

Row-Reduced Form

A matrix is in row-reduced form if it satisfies the following

four conditions:

- All zero rows (if any) appear below all nonzero rows.

-

The first nonzero element in any nonzero row is

-

All elements below and in the same column as the first nonzero

element in any nonzero row are

- The first nonzero element of any nonzero row appears in a column further (farther??) to the right than the first nonzero element in any preceding row.

Ex.

Pivot

If a matrix is in row-echelon form, then the first nonzero

element of each row is called a pivot, and a

column in which a pivot appears is called a

pivot column. In a system of linear equations,

the pivot columns represent basic variables.

Non-basic variables (ones that are not in a pivot column) are

called free variables. Free variables can take

on any value (we are "free" to choose any value)

Now we're ready to solve SLEs!

The first thing we need to do is form an augmented matrix. This is a matrix that has the coefficients of the variables on the left and the constants on the right. For example, the augmented matrix of the system

is

Once we've constructed this augmented matrix, we can use Gaussian elimination to row-reduce it. This involves using elementary row operations to transform the augmented matrix into row-reduced form.

Guassian Elimination

First, note that the following three operations do not change

the set of solutions:

-

-

-

These are called elementary row operations.

We'll talk more about them in a later section.

The augmented matrix of the system

- Write the system of equations as an augmented matrix.

- Use elementary row operations to transform the augmented matrix into row-reduced form.

- Write the equations corresponding to the reduced augmented matrix.

- Solve the new set of equations with back-substitution.

Here are some general guidelines for working with elementary row operations:

- Completely transform one column into the required form before starting on another.

- Work the columns in order from left to right.

-

Never use an operation that changes a

If the number

Once we've row-reduced the augmented matrix, we can write the

system of equations corresponding to the reduced augmented

matrix. We can then solve this system of equations using

back-substitution. For example:

The augmented matrix is

The row-reduced form of the augmented matrix is

The corresponding system of equations is

The last equation is a trivial equation and doesn't provide any new information. So, we can ignore it. We can then solve the remaining two equations using back-substitution.

Elementary Matrices

An elementary matrix

So, the elementary matrix that corresponds to multiplying the

first row of an

LU Decomposition

If a nonsingular (invertible) matrix